If your AI isn’t delivering, blame your data. Smarter architecture — not more algorithms — unlocks real enterprise intelligence.

After many years leading digital transformation across global enterprises, I’ve learned one truth that most CIOs discover too late: AI is only as powerful as the data architecture supporting it. Yet most enterprises are attempting AI deployment on data foundations designed for a pre-intelligent world, then wondering why their multimillion-dollar initiatives fail to deliver transformational value.

Here’s the truth every board needs to hear: Your legacy data architecture is a ticking AI time bomb. This isn’t about buying better algorithms or more compute power. It’s about fundamentally rethinking how your enterprise organizes information.

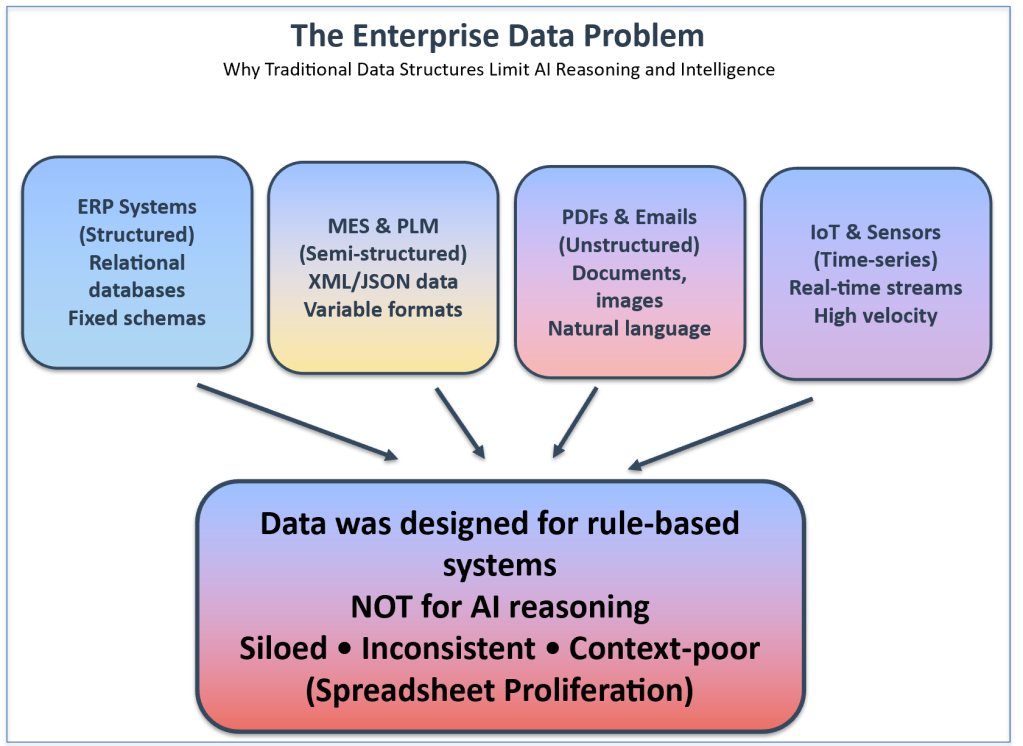

The four-stack trap killing your intelligence

Traditional enterprises operate four separate, incompatible technology stacks, each optimized for a different computing era rather than for AI reasoning. The result is spreadsheets everywhere. Most Excel files are a facade over tribal knowledge: they hoard truth outside enterprise systems, spawn manual workarounds and disconnected data, and bury important processes in VLOOKUPs and a maze of macros. Training your models on this duct-tape foundation is why your AI hallucinates.

Raman Mehta

Your business analytics stack runs BI tools and data warehouses optimized for periodic reports to humans. The data engineering stack efficiently moves information between systems, but it strips away context. Your streaming systems handle real-time events but can’t connect the dots across business domains. The machine learning stack sits in experimental isolation, distant from actual operations.

When you try to deploy AI across these fragmented stacks, chaos follows. The same business data gets replicated across systems with different formats and validation rules. Semantic relationships between business entities get lost during integration. Context critical for intelligent decision-making gets stripped away to optimize for system performance. AI systems receive technically clean datasets that are semantically impoverished and contextually devoid of meaning.

The hidden cost of fragmentation

As organizations begin shaping their enterprise-grade intelligence (EGI) architecture, critical operational intelligence remains trapped in disconnected silos. Engineering designs live in PLM systems, isolated from the ERP bill of materials. Quality metrics sit locked in MES platforms with no linkage to supplier performance data. Process parameters exist independently of equipment maintenance records.

According to analyst estimates, 80% to 90% of worldwide data is unstructured. Yet research shows that 90% of enterprise data goes unused for analytics, creating a massive blind spot in AI deployment.

These silos aren’t just inconvenient. They’re architectural barriers to AI. When deploying AI for manufacturing optimization, systems often fail to trace quality issues to root causes because the relationships between design specs, material properties, process parameters, equipment condition and supplier performance exist only in human memory, not in the data architecture.

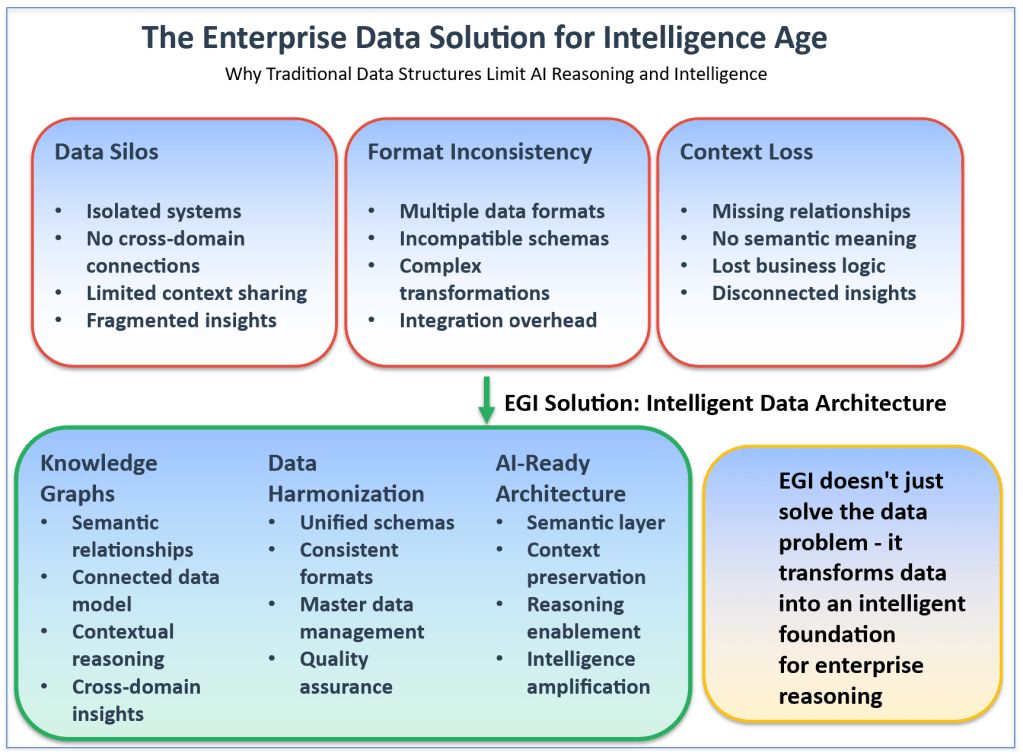

Building AI-ready architecture: Three non-negotiable pillars

Real EGI deployment requires three capabilities impossible with traditional four-stack architectures.

AI data schema design

Stop designing databases for system performance. Start designing for AI reasoning. Instead of separate tables for purchase orders, invoices and receipts, create a procurement intelligence graph that enables AI to reason about supplier performance, payment optimization, risk assessment and supply chain resilience simultaneously.
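To make the contrast concrete, here is a minimal sketch in Python of folding three separate procurement tables into one set of graph edges and then asking a cross-cutting question. The table rows, field names and the `build_graph` and `supplier_summary` helpers are all hypothetical, chosen only for illustration; a real procurement graph would carry far more relationships.

```python
from collections import defaultdict

# Hypothetical rows as they might sit in three separate tables.
purchase_orders = [
    {"po": "PO-1", "supplier": "Acme", "due_day": 10},
    {"po": "PO-2", "supplier": "Acme", "due_day": 20},
    {"po": "PO-3", "supplier": "Bolt", "due_day": 15},
]
receipts = [
    {"po": "PO-1", "received_day": 9},
    {"po": "PO-2", "received_day": 25},
    {"po": "PO-3", "received_day": 15},
]
invoices = [
    {"po": "PO-1", "amount": 1000.0},
    {"po": "PO-2", "amount": 2500.0},
    {"po": "PO-3", "amount": 400.0},
]


def build_graph(purchase_orders, receipts, invoices):
    """Flatten the three tables into (subject, relation, object) edges."""
    edges = []
    for row in purchase_orders:
        edges.append((row["po"], "ordered_from", row["supplier"]))
        edges.append((row["po"], "due_day", row["due_day"]))
    for row in receipts:
        edges.append((row["po"], "received_day", row["received_day"]))
    for row in invoices:
        edges.append((row["po"], "invoiced_amount", row["amount"]))
    return edges


def supplier_summary(edges):
    """Answer one question across all three sources: delivery and spend per supplier."""
    facts = defaultdict(dict)
    for subject, relation, obj in edges:
        facts[subject][relation] = obj
    summary = defaultdict(lambda: {"orders": 0, "on_time": 0, "spend": 0.0})
    for attrs in facts.values():
        entry = summary[attrs["ordered_from"]]
        entry["orders"] += 1
        # A bool adds as 0 or 1, so this counts on-time deliveries.
        entry["on_time"] += attrs["received_day"] <= attrs["due_day"]
        entry["spend"] += attrs["invoiced_amount"]
    return dict(summary)


graph = build_graph(purchase_orders, receipts, invoices)
summary = supplier_summary(graph)
```

The point of the sketch is that delivery performance and spend come out of one traversal over connected facts, rather than from joins that each consumer has to rediscover.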

Verified answers

Unlike consumer AI that can afford probabilistic responses, enterprise AI must operate on verified, human-validated knowledge supporting critical business decisions. Every business question needs validated responses based on engineering analysis, regulatory compliance and operational experience. “What’s the optimal maintenance schedule for production line three?” must link directly to engineering-validated protocols, not statistical probabilities.
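One way to picture a verified answer base is a lookup that returns a validated record or explicitly reports that none exists, instead of falling back to a guess. The sketch below assumes a hypothetical `VerifiedAnswer` record and question key; the answer text, validator and source are placeholders, not real protocol content.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass(frozen=True)
class VerifiedAnswer:
    answer: str        # the human-validated response text
    validated_by: str  # role that signed off (placeholder below)
    source: str        # protocol or document reference (placeholder below)


# Hypothetical answer base keyed by a normalized question id.
ANSWER_BASE: Dict[str, VerifiedAnswer] = {
    "maintenance_schedule/production_line_3": VerifiedAnswer(
        answer="<engineering-validated schedule goes here>",
        validated_by="<validating engineering role>",
        source="<protocol reference>",
    ),
}


def lookup(question_id: str, base: Dict[str, VerifiedAnswer]) -> dict:
    """Return the validated answer with its provenance, or flag the gap."""
    record: Optional[VerifiedAnswer] = base.get(question_id)
    if record is None:
        # No validated answer exists: say so rather than improvise one.
        return {"status": "unverified", "answer": None}
    return {
        "status": "verified",
        "answer": record.answer,
        "validated_by": record.validated_by,
        "source": record.source,
    }


hit = lookup("maintenance_schedule/production_line_3", ANSWER_BASE)
miss = lookup("maintenance_schedule/production_line_9", ANSWER_BASE)
```

Returning an explicit "unverified" status lets the calling system route the question to a human rather than present a statistical guess as fact.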

AI instructions

Raw data lacks the business context that enables intelligent decision-making. AI instruction systems include business rules that govern decisions, regulatory requirements that constrain options, strategic priorities that influence recommendations and risk parameters that guide automated actions.
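As a rough illustration, the four kinds of instruction can be written down as explicit, machine-checkable rules that gate what an AI system is allowed to do on its own. Everything in this Python sketch is an assumption made for illustration: the `Instructions` fields, the region and supplier names, the threshold and the order in which the checks run.

```python
from dataclasses import dataclass
from typing import FrozenSet


@dataclass(frozen=True)
class Instructions:
    restricted_regions: FrozenSet[str]   # regulatory requirement
    preferred_suppliers: FrozenSet[str]  # strategic priority
    auto_approve_limit: float            # risk parameter


def decide(order: dict, rules: Instructions) -> str:
    """Business rule: constraints first, then risk, then priorities."""
    if order["region"] in rules.restricted_regions:
        return "reject"
    if order["amount"] > rules.auto_approve_limit:
        return "escalate"
    if order["supplier"] not in rules.preferred_suppliers:
        return "escalate"
    return "auto_approve"


# Hypothetical rule set for a purchasing agent.
RULES = Instructions(
    restricted_regions=frozenset({"region-x"}),
    preferred_suppliers=frozenset({"supplier-a"}),
    auto_approve_limit=10_000.0,
)

routine = decide({"region": "region-y", "supplier": "supplier-a", "amount": 500.0}, RULES)
large = decide({"region": "region-y", "supplier": "supplier-a", "amount": 50_000.0}, RULES)
blocked = decide({"region": "region-x", "supplier": "supplier-a", "amount": 500.0}, RULES)
```

Keeping the rules in data rather than buried in prompts or code paths makes them reviewable by the people who own the policy.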

Knowledge graphs: Your enterprise neural network

The breakthrough technology enabling EGI is enterprise-scale knowledge graphs that transform disconnected data into connected intelligence. At the heart of this transformation lies the enterprise ontology, which serves as the semantic backbone of the knowledge graph. Think of the ontology as your organization’s business language translator: it doesn’t just define what data exists, but codifies how business concepts relate, interact and constrain each other across your entire enterprise ecosystem.

Traditional systems treat purchase order PO12345 as an isolated database record. A knowledge graph connects it to blanket agreement BA789, links it to supplier rating A+, relates it to a material quality score of 98%, ties it to production schedule requirements and integrates it with cash flow priorities. This semantic richness enables AI to reason about procurement decisions while considering supplier reliability, material quality, production impact, financial optimization and risk management, all simultaneously.
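The PO12345 example can be sketched as a handful of triples plus a bounded traversal that gathers the context an AI system would reason over. This is a minimal Python illustration, not a production graph store: the relation names, the `supplier:S1`, `material:M1` and `production_schedule:PS1` identifiers and the `neighborhood` helper are hypothetical.

```python
from collections import deque

# (subject, relation, object) triples around one purchase order.
TRIPLES = [
    ("PO12345", "covered_by", "BA789"),
    ("PO12345", "ordered_from", "supplier:S1"),       # hypothetical id
    ("supplier:S1", "has_rating", "A+"),
    ("PO12345", "orders_material", "material:M1"),    # hypothetical id
    ("material:M1", "quality_score", "98%"),
    ("PO12345", "feeds", "production_schedule:PS1"),  # hypothetical id
]


def neighborhood(start, triples, max_hops=2):
    """Breadth-first walk collecting every triple within max_hops of start."""
    outgoing = {}
    for subject, relation, obj in triples:
        outgoing.setdefault(subject, []).append((relation, obj))
    seen = {start}
    queue = deque([(start, 0)])
    found = []
    while queue:
        node, hops = queue.popleft()
        if hops == max_hops:
            continue  # do not expand beyond the hop limit
        for relation, obj in outgoing.get(node, []):
            found.append((node, relation, obj))
            if obj not in seen:
                seen.add(obj)
                queue.append((obj, hops + 1))
    return found


record_only = neighborhood("PO12345", TRIPLES, max_hops=1)
with_context = neighborhood("PO12345", TRIPLES, max_hops=2)
```

With one hop the order only knows its direct links; with two hops the supplier rating and material quality score come into view, which is the difference between a record and its context.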

Research from Ventana shows that among enterprises with properly governed data lakes, 41% reported gains in competitive advantage, 37% noted lowered costs, 35% enjoyed improved customer experiences and 33% believed it helped them respond better to opportunities and threats.

The manufacturing intelligence graph connects engineering designs to bills of materials, process parameters, quality metrics, equipment condition, maintenance requirements and performance optimization. The supply chain intelligence graph links supplier contracts to delivery performance, quality assessments, risk factors, backup suppliers and continuity planning. Each connection represents verified business relationships enabling AI reasoning across domains rather than within isolated silos.

Enterprise language models: Beyond generic AI

While Silicon Valley builds general-purpose LLMs, EGI requires enterprise language models (ELMs) that understand your industry context, regulatory requirements and organizational priorities. These aren’t simply fine-tuned versions of consumer AI. They’re purpose-built intelligence systems designed for specific enterprise domains.

The manufacturing ELM understands relationships between material properties and process parameters, recognizes quality patterns indicating specific failure modes, comprehends supply chain risks and mitigation strategies and interprets regulatory requirements across multiple jurisdictions. The financial ELM reasons about cash flow optimization, budget allocation, risk assessment and compliance requirements within specific operational contexts.

These ELMs integrate directly with knowledge graphs, providing contextual intelligence that generic AI cannot achieve. They understand not just what data represents, but how it should be interpreted within specific business contexts and what outcomes align with organizational objectives.

The implementation reality

Transformation from fragmented data stacks to EGI-ready architecture follows a systematic progression spanning 12 to 18 months.

Phase one involves a comprehensive data architecture assessment: inventorying structured and unstructured sources, mapping entity relationships, identifying critical workflows and assessing integration capabilities.

Phase two focuses on knowledge graph foundation: designing enterprise semantic models, implementing data harmonization, creating verified answer bases and establishing AI instruction frameworks. This phase typically requires six to eight months and represents the most technically challenging aspect.

Phase three activates intelligence capabilities through AI reasoning engine deployment, agent-driven workflow implementation, governance framework establishment and systematic scaling across domains. Semantic models and knowledge graphs aren’t luxury items anymore. They’re the fuel of your agentic ecosystem.

The competitive reality

IDC’s latest research indicates that unstructured data is growing at 33% annually and will make up 22% of the total global datasphere, driven largely by IoT and edge devices. Organizations that fail to architect for this reality will find themselves increasingly unable to compete.

Enterprises solving the data architecture challenge gain sustainable competitive advantages. AI deployment timelines are measured in weeks rather than months. Decision accuracy reaches enterprise-grade reliability. Intelligence scales across all business domains. Innovation accelerates as AI creates new capabilities rather than just automating existing processes.

When your enterprise becomes EGI-enabled, the nouns and verbs act in harmony. A modern data lake is the noun: it tells you what entities you have and what relationships they share. Agents built on top of the Model Context Protocol are the verbs: they orchestrate your enterprise.

The strategic question every board must confront: Are we building AI on a foundation designed for intelligence or trying to mount rocket engines on horse-drawn carriages? The enterprises that answer correctly and commit resources to systematic transformation will define the competitive landscape for the next decade.

This article is published as part of the Foundry Expert Contributor Network.
